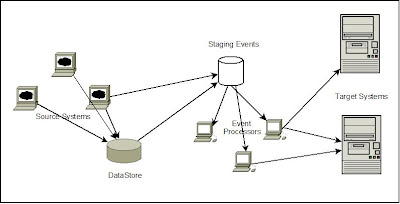

Wikipedia defines it as follows: “Event-driven architecture (EDA) is a software architecture pattern promoting the production, detection, consumption of, and reaction to events.” Any system that must propagate its state changes to multiple other systems as soon as they happen can be built on an event-driven architecture.

Designing a highly scalable event-driven solution takes caution and a good understanding of the trade-offs. I have tried to put together the considerations required at each level for implementing a scalable event-driven system. The key difference between a conventional data-driven architecture and an event-driven one is that events are the input: losing an event can mean losing data, whereas a data-driven system always has the data available for later processing.

Source of Events

As mentioned earlier, events are the key inputs to the system, and they can come from multiple systems. We need to settle on a common format for these events so that a single core processing layer can handle all of them. Take an order processing system as an example. Orders can arrive from multiple sources, and each must first be converted to a single common format (XML, JSON, or even plain POJOs). Transforming the events to a common format ensures that the core processing layer can process them irrespective of their type or source. In most cases this means serializing the data, so we need to consider the transformation time and memory required when choosing the common data format.
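As a rough illustration of the common format, here is a minimal sketch in Java, assuming a plain POJO is chosen and that orders arrive from two sources. The class names (`OrderEvent`, `WebOrderAdapter`, `CsvOrderAdapter`) and the two input shapes are hypothetical and only serve the example.

```java
import java.util.Map;

// Common format that every source is converted to (a plain POJO in this sketch).
final class OrderEvent {
    final String source;     // which upstream system produced the event
    final String orderId;
    final long amountCents;  // whole cents, to avoid floating-point rounding

    OrderEvent(String source, String orderId, long amountCents) {
        this.source = source;
        this.orderId = orderId;
        this.amountCents = amountCents;
    }
}

// One adapter per source; the core processing layer only ever sees OrderEvent.
interface OrderAdapter<T> {
    OrderEvent toCommon(T raw);
}

// Hypothetical web-shop source that delivers orders as key/value pairs.
final class WebOrderAdapter implements OrderAdapter<Map<String, String>> {
    public OrderEvent toCommon(Map<String, String> raw) {
        return new OrderEvent("web", raw.get("id"), Long.parseLong(raw.get("amountCents")));
    }
}

// Hypothetical partner feed that delivers orders as "id,amountCents" lines.
final class CsvOrderAdapter implements OrderAdapter<String> {
    public OrderEvent toCommon(String raw) {
        String[] parts = raw.split(",");
        return new OrderEvent("partner", parts[0].trim(), Long.parseLong(parts[1].trim()));
    }
}
```

Adding a new source then means writing one more adapter, while the processing layer stays untouched.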

Staging the Events

The rate of events in most event-driven applications is not constant; it has peak and lean periods, which can also differ from source to source. To keep control over processing, it is crucial to stage the events in an intermediate store. Events from each source are converted to the common format and then stored in a queue (a JMS queue, a database, or any other storage mechanism). Depending on the system’s requirements, this intermediate storage can be persistent or not. With persistent storage, a crash of the application does not lose events; they stay queued until the application is up again. Staging also helps with transactional data, where queuing the events ensures that parallel updates are processed in sequence.
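The staging idea can be sketched with a bounded in-memory queue. This is only a stand-in: it is not persistent, so it would lose events on a crash, and a real system would put a JMS queue or a database behind the same two operations. The class name `EventStage` and its methods are hypothetical.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// In-memory stand-in for the intermediate store (non-persistent, for illustration only).
final class EventStage {
    private final BlockingQueue<String> queue;

    EventStage(int capacity) {
        // A bounded queue keeps a peak period from exhausting memory.
        this.queue = new ArrayBlockingQueue<>(capacity);
    }

    // Called by the sources. Returns false when the stage is full,
    // so the caller can retry later or reject the event.
    boolean stage(String event) {
        return queue.offer(event);
    }

    // Called by the processors. Returns null when nothing is staged.
    // Events come out in the order they were staged (FIFO).
    String next() {
        return queue.poll();
    }

    // Number of events waiting to be processed.
    int backlog() {
        return queue.size();
    }
}
```

Because the queue is first-in, first-out, updates that arrive in parallel are still handed to the processors one after another in arrival order.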

Common Event Processor

The processing layer needs a pool of stateless workers that process events from the intermediate storage. Where there is more than one type of event, a factory can return the specific processor for each event type. We need to maintain a pool of these processors for two specific reasons:

· We need to cap the number of events processed in parallel according to the available system resources. This is critical because the rate of incoming events varies, and without some control over the rate of processing the system could easily crash.

· In most cases the intermediate storage is external, so reading updates from it requires an I/O call, which can be a costly operation. Pooled processors can be reused instead of being set up again for every event.

The common processors need to be stateless so that their instances can be reused for better performance (mainly when handling the I/O calls). Stateless processors also make it easy to cluster the processing layer, with multiple pools of processors on multiple machines all reading from a single intermediate storage.
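The pool and factory described above could look roughly like the following sketch. It assumes a fixed-size thread pool from `java.util.concurrent` as the cap on parallel processing; the event types and the names `StagedEvent`, `EventProcessor`, `ProcessorFactory` and `ProcessorPool` are made up for the example.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class StagedEvent {
    final String type;
    final String payload;
    StagedEvent(String type, String payload) { this.type = type; this.payload = payload; }
}

// Processors keep no per-event state, so one instance can serve any number of events.
interface EventProcessor {
    String process(StagedEvent event);
}

// Factory returning the processor for a given event type (types are hypothetical).
final class ProcessorFactory {
    private final Map<String, EventProcessor> byType = new HashMap<>();

    ProcessorFactory() {
        byType.put("ORDER_CREATED", event -> "created:" + event.payload);
        byType.put("ORDER_CANCELLED", event -> "cancelled:" + event.payload);
    }

    EventProcessor forType(String type) {
        EventProcessor processor = byType.get(type);
        if (processor == null) {
            throw new IllegalArgumentException("No processor for event type " + type);
        }
        return processor;
    }
}

final class ProcessorPool {
    private final ExecutorService workers;
    private final ProcessorFactory factory = new ProcessorFactory();

    // maxParallel caps how many events are processed at the same time.
    ProcessorPool(int maxParallel) {
        this.workers = Executors.newFixedThreadPool(maxParallel);
    }

    // Submits every event to the pool and returns the results in submission order.
    List<String> processAll(List<StagedEvent> events) {
        List<Future<String>> pending = new ArrayList<>();
        for (StagedEvent event : events) {
            Callable<String> task = () -> factory.forType(event.type).process(event);
            pending.add(workers.submit(task));
        }
        List<String> results = new ArrayList<>();
        try {
            for (Future<String> future : pending) {
                results.add(future.get());
            }
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while processing events", ex);
        } catch (ExecutionException ex) {
            throw new IllegalStateException("Event processing failed", ex);
        }
        return results;
    }

    void shutdown() {
        workers.shutdown();
    }
}
```

Events beyond the cap simply wait in the executor's internal queue, which is what gives us control over the processing rate during a peak.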

Light vs. Heavy Events

Each event is expected to hold information about the change. Processing a specific event may also require information beyond what the event itself carries, so events should be designed to hold everything needed to process them completely. In applications where a single event is handled several times, the event should hold all the common information those handlers need. As mentioned earlier, serializing events to the intermediate storage can be costly, so we need to be careful not to overload events with so much information that they become huge. Equally, we should not make them so light that the processor constantly has to hit the master data store to pull the data it needs. Either extreme can hurt the performance of the overall system, so it is important to get the right data onto the events.

Multiple vs. Single Processor Pool

Most event-driven architectures require every event to be handled more than once. For example, each update might have to be sent to multiple systems, and formatted differently for each target system. In this case we can either have a separate pool of processors for each kind of handling, with every pool processing the same update from the intermediate storage, or have a single processor perform all the handling required for each event. The choice is largely influenced by the design and choice of the intermediate storage. Where a database or file system is used to stage the events, we can have multiple processor pools, because each pool picks up events independently of the others. With a JMS topic and multiple processor pools listening on it, processing can slow down, because subsequent events wait until the current event has been properly received by all of the pools. Whether to use single or multiple processor pools therefore depends on how the pools pick up events. If each pool picks up events independently of the processing of subsequent events, multiple pools work well; otherwise it is better to have a single processor that in turn hands off to multiple processors.
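The "single processor that hands off" option can be sketched as below. This is a simplified, synchronous version that assumes each target system is represented by a `Consumer<String>`; the class name `FanOutProcessor` is hypothetical, and a real hand-off would more likely go to per-target queues or pools.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Single processor that picks up each event once and hands it to every target.
final class FanOutProcessor {
    private final List<Consumer<String>> targets = new ArrayList<>();

    // Each target does its own formatting and delivery for one downstream system.
    void addTarget(Consumer<String> target) {
        targets.add(target);
    }

    // Hands the event to all targets and returns how many of them failed.
    // A failing target does not prevent the remaining targets from being called.
    int dispatch(String event) {
        int failures = 0;
        for (Consumer<String> target : targets) {
            try {
                target.accept(event);
            } catch (RuntimeException ex) {
                failures++;
            }
        }
        return failures;
    }
}
```

The event is read from the intermediate storage only once, and the per-target formatting stays inside each target.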

Persisting Processed Updates

It can also be a good design decision to persist the processed events for future reference. This is a definite requirement when we expect duplicate events and do not want to process them twice. The persisted events let us check for duplicates and ignore them as required. In most cases where the events are pushed to external systems, this also helps reduce unnecessary duplicate updates.
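A minimal sketch of the duplicate check, assuming every event carries a unique ID. The in-memory set stands in for the persistent store of processed events, and the names `ProcessedEventLog` and `DedupingHandler` are hypothetical.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Stand-in for the persistent store of processed events (in-memory for illustration).
final class ProcessedEventLog {
    private final Set<String> processedIds = ConcurrentHashMap.newKeySet();

    // Returns true only the first time an ID is recorded. Set.add is atomic here,
    // so two workers cannot both claim the same event.
    boolean markIfNew(String eventId) {
        return processedIds.add(eventId);
    }
}

final class DedupingHandler {
    private final ProcessedEventLog log = new ProcessedEventLog();
    private int handledCount = 0;

    // Returns true if the event was processed, false if it was a duplicate and ignored.
    boolean handle(String eventId, String payload) {
        if (!log.markIfNew(eventId)) {
            return false;
        }
        handledCount++; // the real update to the external system would happen here
        return true;
    }

    int handledCount() {
        return handledCount;
    }
}
```

Note that this sketch records the ID before processing; if processing can fail, the ID would need to be recorded together with the result so that a retry is not mistaken for a duplicate.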

The key to a successful event-driven architecture is processing and delivering events to the target systems in real time, and being able to scale as the volume of events grows. Including a timestamp on each event and instrumenting the processors to measure processing time and throughput is therefore very important. The resulting performance and load data can be used to plan the hardware required for the event-driven architecture.
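The instrumentation can start out very small. The sketch below assumes each event carries its creation time in milliseconds and that the processor reports the completion time; the class name `ProcessingStats` and its methods are hypothetical.

```java
import java.util.concurrent.atomic.AtomicLong;

// Collects end-to-end latency and throughput figures; safe to share between workers.
final class ProcessingStats {
    private final AtomicLong count = new AtomicLong();
    private final AtomicLong totalLatencyMillis = new AtomicLong();
    private final AtomicLong maxLatencyMillis = new AtomicLong();

    // createdAtMillis is the timestamp carried on the event,
    // completedAtMillis is when the processor finished with it.
    void record(long createdAtMillis, long completedAtMillis) {
        long latency = Math.max(0, completedAtMillis - createdAtMillis);
        count.incrementAndGet();
        totalLatencyMillis.addAndGet(latency);
        maxLatencyMillis.accumulateAndGet(latency, Math::max);
    }

    long count() {
        return count.get();
    }

    double averageLatencyMillis() {
        long n = count.get();
        return n == 0 ? 0.0 : (double) totalLatencyMillis.get() / n;
    }

    long maxLatencyMillis() {
        return maxLatencyMillis.get();
    }

    // Events per second over an observation window chosen by the caller.
    double throughputPerSecond(long windowMillis) {
        return windowMillis <= 0 ? 0.0 : count.get() * 1000.0 / windowMillis;
    }
}
```

Comparing average and maximum latency across peak and lean periods gives a first indication of whether the processor pool or the hardware needs to grow.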